One Quick Way To Host A WebApp

Revision as of 21:23, 18 November 2025

I host a couple of web-apps on a VPS and it's gotten to the point that I'm fairly quick at doing it. However, if you haven't done this before, there are a lot of fiddly bits and it doesn't make much sense. I'll write it down here since I easily forget.

This is mostly driven by the problems I've run into doing other things, and the specific considerations of how I like to host stuff (get a weak VPS and put everything on it) because I don't get that much traffic.

Considerations

- Things should be replicable given software changes over time

- Things should be easy to edit and update

- The iteration cycle should be short

These all matter because I used to run everything raw on the machine, and over time my 10-year-old box accumulated so much bespoke fixing that I couldn't easily recreate it, resulting in the death of another blog I used to have. At the same time, I don't want to deal with too much infra (say a container registry and kubernetes and so on). Others may have other concerns but this balance feels right for me.

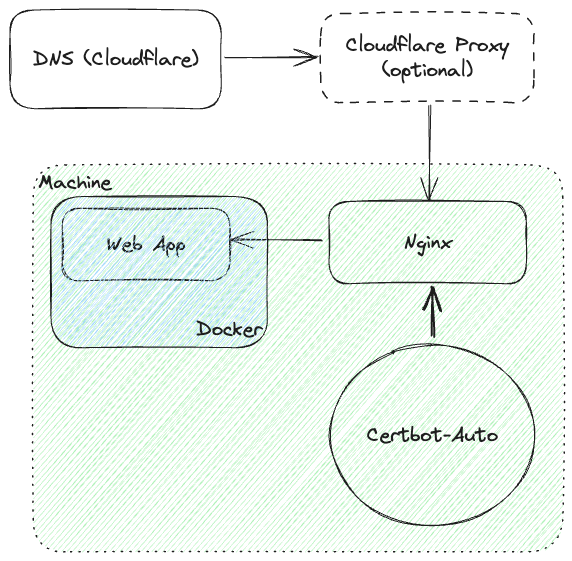

The Design

- I use Namecheap for domains ever since Google Domains went down

- I use Cloudflare for DNS ever since Google Domains went down

- I use Cloudflare as a web proxy

- I use Cloudflare R2 for persistent storage

- I use nginx as a reverse proxy

- I use certbot to get a Let's Encrypt SSL cert

- I run each app in Docker

- I keep them running using systemd

- I use a single MySQL and a single PostgreSQL on the same host

- I build my apps on the host

- I use Cloudflare Tunnel to self-host on my home hardware

The idea is that nothing should lock me in too hard because I will likely not pay enough attention to it and the ecosystem will change around me and then I'll be stuck. Each bit here acts as middleware and is easily substitutable.

The rawest thing is the SQL DB and the app itself. We wrap that in Docker so environments are fixed. We wrap that in systemd so restarting is easy. We point nginx to that so that we can have a high-performance front-end serve static files and so on. We wrap that in Cloudflare so that we can get speed. And then we need DNS and a domain to get things done.

R2 for storage means we've got all the data somewhere nice and with it mounted locally we can just dump DB backups to it.

If each piece went away it would be straightforward to just disable it and move one step back. Nothing is catastrophic.

The Environment

Domain

I usually have a domain already but otherwise I buy it on Namecheap because it's straightforward to use. It's pretty much only good as a domain registrar and I don't use any of the other features with it. Any registrar is fine here.

DNS

The first thing I do is create a Cloudflare zone and point the NS records to Cloudflare. CF is way better at managing DNS and has all sorts of features that make it worthwhile. Then, once CF is set up, I point the record at my host without proxying. This does reveal the underlying IP but that's fine because no one is trying to hurt me.

I do this primarily to simplify HTTP-based certbot later. I've also done Cloudflare DNS-based certbot and it's actually just as easy, but you have to create a Cloudflare token for the zone and put it on the host.

But an additional benefit is that at this stage if you put a web server on the host then you're functioning as raw as can be. You can run (in a temporary directory with a simple `index.html`):

python3 -m http.server 10000

And verify that you can access it at http://sub.roshangeorge.dev:10000, and you know you're set up for the world. You don't have HTTPS but what are you securing anyway? If you can't access things at this point, try via the IP (if that works, DNS is the issue), then from the host itself (if that works, you may not have bound to the external IP, or to 0.0.0.0 if you're promiscuous), and then check your firewall.
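The debugging order above can be sketched as a small script. This is only an illustration: `reachable` and `diagnose` are hypothetical helper names, and `203.0.113.10` is a placeholder for the host's real IP.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds if the URL gives any HTTP answer within 5 seconds.
reachable() {
  curl --silent --output /dev/null --max-time 5 "$1"
}

# Map the three probes (by name, by IP, from the host itself) to the layer to look at.
# Each argument is "yes" or "no".
diagnose() {
  local by_name=$1 by_ip=$2 on_host=$3
  if [ "$by_name" = yes ]; then
    echo "ok"
  elif [ "$by_ip" = yes ]; then
    echo "dns"
  elif [ "$on_host" = yes ]; then
    echo "bind-address-or-firewall"
  else
    echo "server-not-running"
  fi
}

# Usage (the first two probes from your laptop, the third on the host):
#   reachable http://sub.roshangeorge.dev:10000 && by_name=yes || by_name=no
#   reachable http://203.0.113.10:10000         && by_ip=yes   || by_ip=no
#   reachable http://localhost:10000            && on_host=yes || on_host=no
#   diagnose "$by_name" "$by_ip" "$on_host"
```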

Nginx

Nginx is a good web server to use as a reverse proxy. Some people use Caddy since it has Let's Encrypt built-in for HTTPS but this works for me so I use it. k3s and friends use Traefik and that works well too. In the LLM age, one advantage is that Nginx is well-documented and has been around for a while with a consistent configuration format.

As usual I make one of these simple config files in `/etc/nginx/sites-available/sub.roshangeorge.dev` and symlink from `/etc/nginx/sites-enabled/sub.roshangeorge.dev` to it.

server {
    server_name sub.roshangeorge.dev;

    location /uploads/ {
        alias /mnt/r2/uploads/; # Serve static files from this directory
        expires max;
    }

    location / {
        proxy_pass http://localhost:10000; # Proxy pass to app
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
    }
}

This is roughly the same each time. I want some things to be static, usually favicons and robots.txt and llms.txt and stuff like that. And the rest I'm going to send over to the app which is going to run at that port.

I perform the cargo-cult ritual of restarting nginx after each of these things, though technically a `reload` should do the trick.

sudo systemctl restart nginx

Once this is all running, we should be able to go to http://sub.roshangeorge.dev and it should correctly show what the app would show (which in our case is Python's default `http.server`). If I have websockets there are a few more incantations around the `Upgrade` header.

Certbot

For SSL, I just run certbot on the machine. When you run certbot for the first time it will prompt you to select which host you want to configure. Or you could just tell it yourself.

sudo certbot --nginx -d sub.roshangeorge.dev

As usual, I do the ritual restart of the nginx. Then we should be able to access https://sub.roshangeorge.dev over SSL and we're pretty happy about the environment.

If you check your `sites-available/my-site` you'll see that certbot has added the certificates in and everything. It also adds a recurring job to refresh the certificates.

Cloudflare Web Proxy

At this point if you want, you can go back and switch the DNS settings on Cloudflare to start proxying. If you then access your site it should just work. Doing it earlier makes debugging hard, but doing it now should just work.

Cloudflare R2

I also like to mount an R2 bucket so that I have lots of storage and a backup target to hit.

s3fs r2-storage /mnt/r2 -o rw,allow_other,umask=000,passwd_file=/etc/fuse-r2/passwd-s3fs,url=https://userspecific.r2.cloudflarestorage.com,use_path_request_style,nomixupload,dev,suid

You'll have to make sure your `/etc/fuse.conf` has the following in order to allow ordinary users access

user_allow_other

And then you can mount as one user who has access to both MySQL and your R2 and they can go around backing up stuff to R2!

Cloudflare Tunnel

You can do all of this on your home hardware if you feel like it. The way I do this is that I have an Epyc-based home server running at home on my Google Fiber connection. I then use Cloudflare tunnel to link traffic between Cloudflare's network and that home server. This takes the whole dynamic DNS business out of the picture and you can just push traffic through the tunnel.

One downside is that if you lose power at home, your site is going to go down. But you can use Cloudflare's Always Online to get cached versions of your pages out of the Internet Archive. That's good enough for me since I can bring things up at a later date, and switching away from this is just a quick DNS swap to an externally visible host.

The App

The App Itself

The first thing I try to do is get the app running one way or the other. This part is easy and I just iterate locally on my dev machine (a MacBook). Once I've got it running, I try to encode that state into a Docker container. I use OrbStack to develop on macOS because it's far more convenient than Docker proper.

This is not technically required, but since I have low usage, I can pack more things onto a single box with Docker since I don't have to worry about environments clobbering each other. It's also not usually too hard.

Database

If you're writing a personal application, SQLite is the best tool to reach for. It's a single file and it's easy to deal with. If you're also hosting other things, like I am with Mediawiki, it is worth running your distribution's default MySQL/PostgreSQL on the host itself. If you're going to use Docker later, remember that users will need to be granted permissions to log in from the Docker/Podman network too (which is often 10.x.x.x).

Hosting Your Code

You can build your app and only deploy the artifacts, but I've found it much easier to just push the source code and build the app on the production host. The way I do this is perhaps unorthodox or perhaps standard.

First, create your app directory:

mkdir ~/sub.roshangeorge.dev

Then make it into a git repo:

cd ~/sub.roshangeorge.dev

git init .

Then on your dev machine, add it as a remote:

git remote add deploy user@host:~/sub.roshangeorge.dev

git push deploy main

Now, this would seem to be enough, but you can't push to a branch that is checked out. So I only ever have 'releases' checked out on the host, and a release is just a tag. So on my local machine, I might do:

git tag release-20250313

git push deploy --tags

And then on the host I check out the relevant tag when I want to run that:

git checkout release-20250313

This little trick lets me just `git push` when I want to deploy. If you like, you can put in post-receive hooks and all that, but I haven't found them useful. I just ssh on and build.
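The tag-push-checkout cycle can be wrapped in a couple of shell functions. A minimal sketch, assuming the `deploy` remote and `user@host` placeholder from above; `release_tag` and `deploy` are hypothetical names, and `bin/build` stands in for whatever build step the repo has.

```shell
#!/usr/bin/env bash
# Build a release tag from today's date, e.g. release-20250313.
release_tag() {
  echo "release-$(date +%Y%m%d)"
}

# Tag locally, push the branch and tags to the `deploy` remote,
# then check the tag out on the host and build there.
deploy() {
  local tag
  tag=$(release_tag)
  git tag "$tag" &&
    git push deploy main --tags &&
    ssh user@host "cd ~/sub.roshangeorge.dev && git checkout $tag && bin/build"
}
```

A date-only tag means a second release on the same day fails at `git tag`; adding a time or counter suffix would avoid that.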

Building

I like to have, in each of my repos, a `bin/build` script. For the ones with Dockerfiles these look as simple as:

#!/usr/bin/env bash

podman build -t sub.roshangeorge.dev:latest .

A convenience thing I have throughout is that I refer to the app everywhere by some canonical subdomain. The docker tag is the subdomain, the nginx site is the subdomain name, the code directory is the subdomain. Everything is the subdomain.

I also have a `bin/backup` that describes how to back up.
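As an illustration of what such a `bin/backup` might contain, here is a minimal sketch that dumps one MySQL database onto the R2 mount. The `/mnt/r2/backups` directory and the function names are assumptions, and it presumes MySQL credentials are already configured for the user running it (for example in `~/.my.cnf`).

```shell
#!/usr/bin/env bash
# Path for today's dump of a given database under a backup root.
backup_path() {
  local root=$1 db=$2
  echo "$root/$db-$(date +%Y%m%d).sql.gz"
}

# Dump one MySQL database and store it compressed on the R2 mount.
# The dump goes to a local temp file first so a failed mysqldump
# does not leave a truncated archive behind.
backup_db() {
  local db=$1 out tmp
  out=$(backup_path /mnt/r2/backups "$db")
  tmp=$(mktemp) || return 1
  mkdir -p "$(dirname "$out")" &&
    mysqldump --single-transaction "$db" > "$tmp" &&
    gzip -c "$tmp" > "$out"
  local status=$?
  rm -f "$tmp"
  return "$status"
}
```

Calling `backup_db wiki` (with `wiki` as a hypothetical database name) would write a dated `.sql.gz` file under `/mnt/r2/backups`.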

Containerization

I prefer using Linux containers for everything because they pin the environment but also because they keep app environments in their own worlds. Mediawiki, which this site is based on, is written in PHP and composer (a PHP package manager) just goes everywhere. With docker/podman, all that is contained in one place.

Other languages don't do this as much. For example, Rust's `cargo` is quite well-contained in that it runs inside a user-context. So I build Rust programs in their own directory. I used to run Python programs in containers exclusively but with `mise` and `uv` it is possible to run them raw. I still prefer to run them in containers, though. I just have to remember to make them listen on a unique port, and to expose the port.

Quadlets

I used to use Podman and then write a traditional systemd unit file to manage the container as if it were a service. I liked that approach because the fact that it was in podman was an implementation detail of the app. I could just as well have it a bare binary doing the same thing and I'd run the same `systemctl` command.

I later discovered systemd quadlets: containers managed by systemd itself. These are quite nice since you write them in a format that is like a unit file and you can use your usual systemctl commands to manage them directly. Here's an example quadlet configuration.

[Unit]

Description=MediaWiki Container

After=network-online.target

Wants=network-online.target

[Container]

Image=localhost/wiki.roshangeorge.dev:latest

ContainerName=rowik

Environment=MEDIAWIKI_DB_TYPE=mysql

PublishPort=127.0.0.1:8080:80

Volume=/mnt/r2/uploads:/var/www/html/images:Z

AutoUpdate=registry

[Service]

Restart=always

TimeoutStartSec=300

[Install]

WantedBy=multi-user.target default.target

One debugging annoyance is that quadlet configuration errors are hard to see. A systemd generator converts the quadlet file into a service file on the fly and systemd then runs that, so a configuration error is not recorded against the service. Instead it shows up as a syslog error from the unit file generator, and all you get from `systemctl` is a 'service not found'. Regardless, I find quadlets nicer to manage than writing a run script and a systemd unit file around that script.
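One way to surface those generator errors is to run the generator by hand. A sketch, assuming a rootless (user) quadlet; the generator path below is the one the Podman documentation gives and may differ on your distribution, and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# A quadlet file `foo.container` is turned into a generated `foo.service`.
service_for() {
  echo "$(basename "$1" .container).service"
}

# Run the quadlet generator in dry-run mode so parse errors are printed
# to the terminal instead of only landing in the system log.
quadlet_check() {
  /usr/lib/systemd/system-generators/podman-system-generator --user --dryrun
}

# Usage:
#   quadlet_check
#   systemctl --user daemon-reload
#   systemctl --user status "$(service_for registry.container)"
```

For quadlets installed system-wide, drop `--user` from both the generator call and the `systemctl` commands.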

Docker / Podman

The default thing I used to do was build the Docker images on the server and just run them there. When you do that, you can use docker/podman for process management or you can use systemd to manage your docker/podman commands. I prefer using systemd because then I don't have to remember which parts are managed with systemd and which ones with docker.

One thing to note with podman is that you have to fully specify the name of parent images unless you configure the default to docker.io. Fully specifying the parent images is more portable in that you just copy the Dockerfile elsewhere and it will still build. Here's an example Dockerfile for my wiki.

FROM docker.io/mediawiki:latest

RUN apt-get update && apt-get install -y lua5.4 sendmail

COPY ./apache.conf /etc/apache2/sites-available/000-default.conf

WORKDIR /var/www/html

COPY custom-php.ini /usr/local/etc/php/conf.d/custom-php.ini

# Copy any additional directories (extensions, skins, etc.)

COPY extensions/ ./extensions/

COPY robots.txt ./robots.txt

RUN ln -sf images uploads

COPY LocalSettings.php ./LocalSettings.php

# update if possible (so migrations etc. can run)

RUN php maintenance/update.php --quick

# generate the sitemap (so that google can find sub-pages)

RUN php maintenance/run.php generateSitemap --compress=no --identifier=sitemap

RUN cp sitemap-index-sitemap.xml sitemap.xml

Process Management

With docker/podman, you can just set them to restart or whatever whenever you like and things will stay perpetually running, but I like a single system for all my apps, whether docker or not, and so I use systemd to manage them. An example systemd file looks like this for a docker app:

[Unit]

Description=App Container

After=network.target

[Service]

Type=simple

User=ubuntu

ExecStart=/usr/bin/podman run --name sub_roshangeorge_dev --mount type=bind,source=/mnt/r2/files,target=/var/www/files -e ENV="var" -p 127.0.0.1:8080:80 sub.roshangeorge.dev:latest

ExecStop=/usr/bin/podman stop sub_roshangeorge_dev

ExecStopPost=/usr/bin/podman rm sub_roshangeorge_dev

Restart=always

[Install]

WantedBy=multi-user.target

But you could just as well have a simple non-docker version (this one is a mail server not a web-app but the idea applies)

[Unit]

Description=Superheap RSS Service

After=network.target

[Service]

Type=simple

User=ubuntu

ExecStart=/home/ubuntu/superheap/target/release/superheap serve -c /home/ubuntu/rss/superheap.json

Restart=always

RestartSec=5

WorkingDirectory=/home/ubuntu

[Install]

WantedBy=multi-user.target

These unit files need to end up in either `/etc/systemd/system/sub.roshangeorge.dev.service` or, if you want to keep everything constrained to your home directory, `~/.config/systemd/user/sub.roshangeorge.dev.service`. You don't have to copy them there, though: you can leave the service unit file in your repo and symlink it in. Because your entire repo is on the host, you can embed all of the host configs in your repo and then symlink them as appropriate.
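The symlinking step can be sketched as a small helper. `link_unit` is a hypothetical name, and the `systemd/` directory inside the repo is an assumption about layout.

```shell
#!/usr/bin/env bash
# Symlink a unit file kept in the repo into a systemd unit directory.
# -f replaces an existing link, -n avoids following an existing link to a directory.
link_unit() {
  local src=$1 dest_dir=$2
  mkdir -p "$dest_dir" &&
    ln -sfn "$src" "$dest_dir/$(basename "$src")"
}

# Usage (per-user unit):
#   link_unit ~/sub.roshangeorge.dev/systemd/sub.roshangeorge.dev.service ~/.config/systemd/user
#   systemctl --user daemon-reload
#   systemctl --user enable --now sub.roshangeorge.dev.service
```

For a per-user unit, `WantedBy=default.target` is the usual install target, and the `User=` line is not needed since the unit already runs as that user.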

Registry

Originally, I used to just build the docker images on the server itself. This was simply out of convenience. After some time, it got to be a bit of a hassle and I found myself doing the annoying thing of just writing code on the server and building the image there. Well, that's a bit dopey, but it's very easy to fix. You can run a registry container on the server and just push images to it when you're ready.

ubuntu@kant:~$ cat ~/.config/containers/systemd/registry.container

[Unit]

Description=Container Registry

After=network-online.target

Wants=network-online.target

[Container]

Image=docker.io/library/registry:2

PublishPort=5000:5000

Volume=registry-data:/var/lib/registry

AutoUpdate=registry

[Service]

Restart=always

TimeoutStartSec=900

[Install]

WantedBy=default.target

That's a systemd quadlet that runs the registry. I do the usual `docker build --platform=linux/amd64 .` on my Mac and then the `docker tag registry.blahblah.com` and then `docker push` to it and it all works just like you'd expect.
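Put together, the build-and-push step might look like the sketch below. `image_ref` and `push_image` are hypothetical names, and `registry.blahblah.com` is the same stand-in hostname as above. Tagging at build time with `-t` replaces the separate `docker tag` step.

```shell
#!/usr/bin/env bash
# Compose a full image reference: <registry>/<name>:<tag>.
image_ref() {
  echo "$1/$2:$3"
}

# Build for the server's architecture and push to the registry.
push_image() {
  local ref
  ref=$(image_ref "$1" "$2" "$3")
  docker build --platform=linux/amd64 -t "$ref" . &&
    docker push "$ref"
}

# Usage:
#   push_image registry.blahblah.com sub.roshangeorge.dev latest
```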

Conclusion

To be honest, I've found this entire thing quite straightforward to use and the reason I use it is that few of the parts are integrally linked to others. That makes it easy to just `ssh` on and debug things. Each piece just does the one bit. And in a world with no Cloudflare, it would all stay the same (just my DNS would be from some other provider, and I'd store stuff on-host). I don't specifically need docker or anything, it's just one piece of the puzzle.

Hopefully, in solving my "the environment has changed" problem I haven't introduced anything new and novel to my universe. I've hosted using this solution for a couple of years.