Blog/2025-12-01/Grounding Your Agent

Latest revision as of 06:01, 4 December 2025

Julie and I got a Bambu Studio recently and it's been fairly useful, providing us with a cover for some balcony lights, and a little holder for a toilet-reading book. These are models you can find online that other people have kindly provided. But one thing I've wanted is to be able to mount our Eufy E21 baby monitor at an angle so that it can look into the baby bed that is on the floor.

ChatGPT

As with most things I do these days, I reached for an LLM. ChatGPT has pretty good knowledge of how to use Bambu Studio etc., but an STL file is just a list of triangles describing the surface of a shape. LLMs are notoriously bad at creating representations of shapes directly, but in my experience they are pretty good programmers, and they're pretty good at checking their work and modifying what they have.

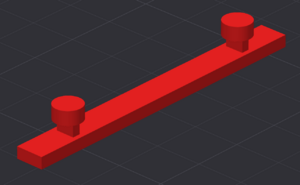

The most minimal object I wanted to create first was a little bar to check dimensions. I wanted it to fit in the mounting holes on the E21's base and slide in. Then if that worked, I'd take the bar out and use the resulting dimensions to configure a full build that involved a leaning ramp for the E21.
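A bar like this is simple enough to write out directly as triangles. The following is a minimal sketch, not the script ChatGPT produced: the function names are made up for illustration, and the 40 x 6 x 3 mm dimensions are placeholders rather than measurements of the E21's mounting holes.

```python
import math


def box_triangles(lx, ly, lz):
    """Return the 12 triangles of an lx-by-ly-lz box with one corner at the origin.

    Each triangle is a tuple of three (x, y, z) vertices, wound
    counter-clockwise when seen from outside the box.
    """
    # Corner index = 4*xi + 2*yi + zi, where each of xi, yi, zi is 0 or 1.
    v = [(x, y, z) for x in (0.0, lx) for y in (0.0, ly) for z in (0.0, lz)]
    quads = [
        (0, 1, 3, 2),  # face at x = 0
        (4, 6, 7, 5),  # face at x = lx
        (0, 4, 5, 1),  # face at y = 0
        (2, 3, 7, 6),  # face at y = ly
        (0, 2, 6, 4),  # face at z = 0
        (1, 5, 7, 3),  # face at z = lz
    ]
    triangles = []
    for a, b, c, d in quads:
        # Split each rectangular face into two triangles with the same winding.
        triangles.append((v[a], v[b], v[c]))
        triangles.append((v[a], v[c], v[d]))
    return triangles


def facet_normal(a, b, c):
    """Unit normal of triangle abc, following the right-hand rule."""
    ux, uy, uz = (b[i] - a[i] for i in range(3))
    vx, vy, vz = (c[i] - a[i] for i in range(3))
    nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    return (nx / length, ny / length, nz / length)


def to_ascii_stl(triangles, name="test_bar"):
    """Serialise triangles in the ASCII STL format."""
    lines = [f"solid {name}"]
    for a, b, c in triangles:
        n = facet_normal(a, b, c)
        lines.append(f"  facet normal {n[0]:.6f} {n[1]:.6f} {n[2]:.6f}")
        lines.append("    outer loop")
        for p in (a, b, c):
            lines.append(f"      vertex {p[0]:.6f} {p[1]:.6f} {p[2]:.6f}")
        lines.append("    endloop")
        lines.append("  endfacet")
    lines.append(f"endsolid {name}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Placeholder dimensions in millimetres, NOT measured from a real E21.
    bar = box_triangles(40.0, 6.0, 3.0)
    with open("test_bar.stl", "w") as f:
        f.write(to_ascii_stl(bar))
```

Because every face is built from shared corner coordinates with a consistent winding, a box generated this way is closed by construction, which is exactly the property that gets lost once the shapes become more complicated.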

Formware Repair Tool

At first, I thought I'd try just describing the model I wanted and having ChatGPT generate it by writing a Python script, which it gamely attempted. The resulting file was full of self-intersections and similar defects, and to print it at all I had to use the Formware Online STL Repair Tool (https://www.formware.co/onlinestlrepair), which, to its credit, made a fairly decent model out of that structure.

I did try prompting ChatGPT to fix up the model but, as is typical in this kind of situation, it only produced more crooked models, ones that even after Formware's 'fixing' bore no resemblance to the original goal.

Grounding

The typical approach at this point with an LLM is to provide it with grounding information so that it can verify how good the final result is. In this case, I had it write a check_mesh.py file that could verify whether the volume was well-formed by whatever criteria we cared about, and then let it have the run of the house. Early LLM agents would go around modifying the test file instead, but by now they've gotten quite good, and with this grounding, codex running gpt-5.1-codex-max was able to produce a workable model that didn't have any of the odd self-intersection problems and so on.
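The actual check_mesh.py isn't reproduced here, so the following is only a sketch of what such a check could look like. It assumes the mesh is already in memory as a list of triangles whose shared corners have exactly equal coordinates, and it tests two things: that every edge is shared by exactly two consistently wound triangles, and that the enclosed volume is positive. It does not detect self-intersections, which would need a geometric test on top of this. The names `check_mesh`, `signed_volume`, and `TETRA` are illustrative.

```python
from collections import Counter


def signed_volume(triangles):
    """Sum of signed tetrahedron volumes; positive for a closed, outward-wound mesh."""
    total = 0.0
    for a, b, c in triangles:
        # a . (b x c) is six times the signed volume of tetrahedron (origin, a, b, c).
        cx = b[1] * c[2] - b[2] * c[1]
        cy = b[2] * c[0] - b[0] * c[2]
        cz = b[0] * c[1] - b[1] * c[0]
        total += a[0] * cx + a[1] * cy + a[2] * cz
    return total / 6.0


def check_mesh(triangles):
    """Return a list of problems found; an empty list means the checks passed."""
    problems = []
    directed_edges = Counter()
    for a, b, c in triangles:
        if a == b or b == c or a == c:
            problems.append(f"degenerate triangle: {a} {b} {c}")
        for u, v in ((a, b), (b, c), (c, a)):
            directed_edges[(u, v)] += 1
    # In a closed, consistently wound mesh each directed edge appears once,
    # and its reverse appears once (from the neighbouring triangle).
    for (u, v), count in directed_edges.items():
        if count != 1 or directed_edges.get((v, u), 0) != 1:
            problems.append(f"edge {u} -> {v} is not shared by exactly two matching triangles")
    volume = signed_volume(triangles)
    if volume <= 0:
        problems.append(f"signed volume is {volume}, expected a positive value")
    return problems


# Example: a unit tetrahedron with outward-facing (counter-clockwise) triangles.
O, A, B, C = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)
TETRA = [(O, B, A), (O, A, C), (O, C, B), (A, B, C)]

if __name__ == "__main__":
    print(check_mesh(TETRA))      # closed mesh: no problems reported
    print(check_mesh(TETRA[:3]))  # one face missing: problems reported
```

Returning a list of problem descriptions rather than a bare pass/fail gives the agent something specific to act on in its next attempt.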

I'm pretty early into trying out 3-D printing things for our own convenience, so I'm hoping to find better ways to build these things as time goes by, but one thing that's nice to see is that the classical approach worked:

- Have an LLM write a check program

- Have the LLM work on the problem until the check program passes
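In practice the agent runs this loop itself, but spelled out as code it looks roughly like the sketch below. Here `propose` is a hypothetical stand-in for the LLM and `check` for the check program; neither name comes from a real library.

```python
def work_until_check_passes(propose, check, max_attempts=5):
    """Call propose(feedback) until check(candidate) reports no problems.

    propose: hypothetical stand-in for the LLM; takes the previous list of
             problems and returns a new candidate.
    check:   the check program; returns a list of problems (empty means pass).
    """
    feedback = []
    for attempt in range(1, max_attempts + 1):
        candidate = propose(feedback)
        feedback = check(candidate)
        if not feedback:
            return candidate, attempt
    raise RuntimeError(f"check still failing after {max_attempts} attempts: {feedback}")


if __name__ == "__main__":
    # Toy stand-ins: the "model" just tries the next width, the check wants width 6.
    widths = iter([4, 5, 6, 7])
    result = work_until_check_passes(
        propose=lambda feedback: next(widths),
        check=lambda w: [] if w == 6 else [f"width {w} does not fit"],
    )
    print(result)  # (6, 3)
```

The attempt limit matters: without it, a check the model cannot satisfy would keep the loop running indefinitely.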

It's pretty trivial, and practically everyone does this these days, but it's magical when it works across so many different fields.