LLM Conversational Level: Difference between revisions

No edit summary. Tag: Reverted

Tag: Undo

Line 26:

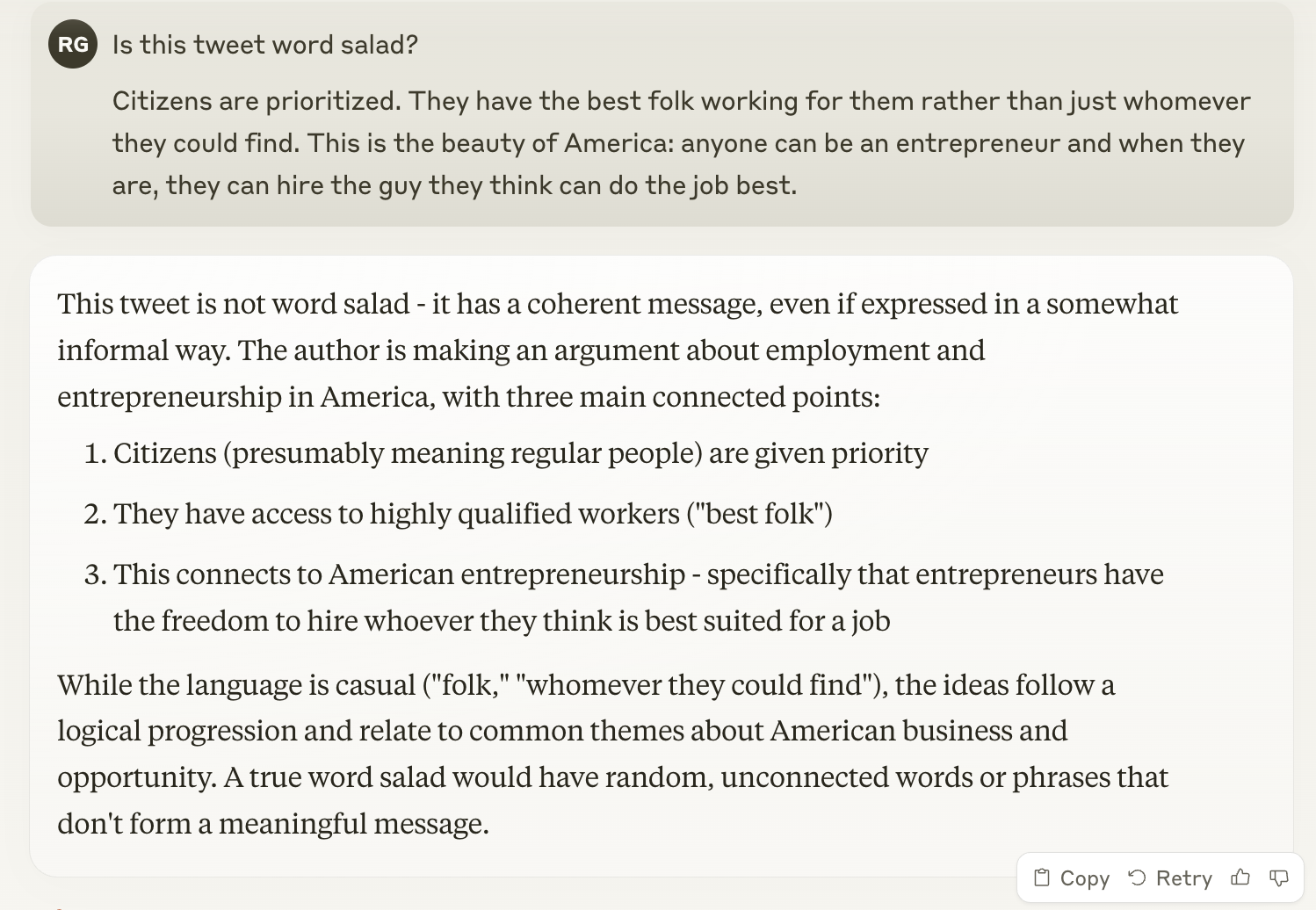

[[File:Screenshot of Claude 3.5 Sonnet interpreting a tweet by arjie.png|frame|center|This is a pretty big commercial model so it's not a surprise it gets it right]]

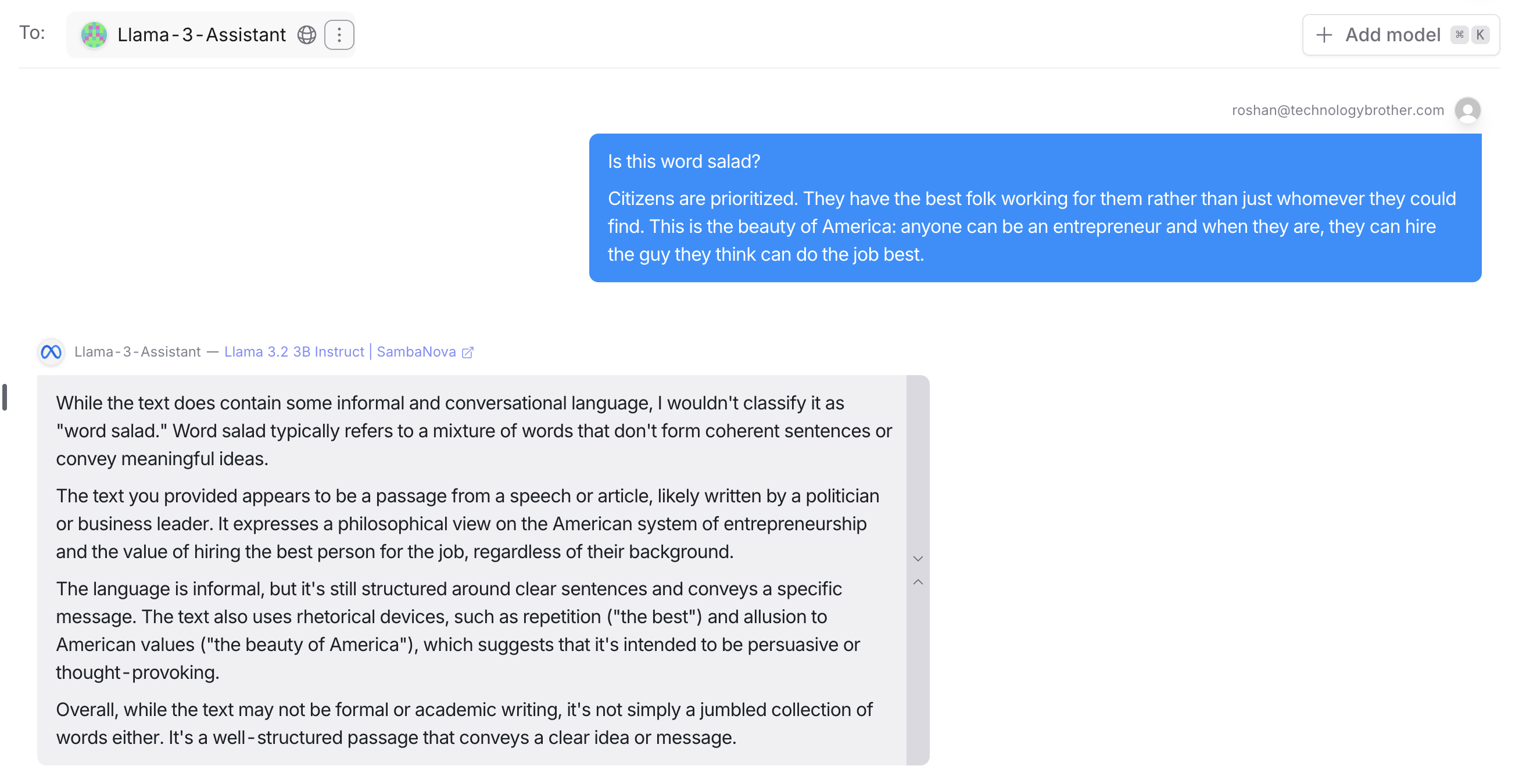

[[File:Screenshot of Llama 3.2 - 3B interpreting a tweet by arjie.png|frame|center|This Llama 3.2 is quite small, having only 3B parameters (which is still large compared to the GPT-2 1.5B model)]] | [[File:{{#setmainimage:Screenshot of Llama 3.2 - 3B interpreting a tweet by arjie.png}}|frame|center|This Llama 3.2 is quite small, having only 3B parameters (which is still large compared to the GPT-2 1.5B model)]]

Based on this, we can safely conclude that the text has an LCL of at most 3 billion. We must also conclude that the person unable to comprehend the original tweet has an LCL lower than 3 billion as well.

== Footnotes ==

<references />

[[Category:Concepts]]

Revision as of 21:52, 2 January 2025

The LLM Conversational Level (LCL) of a piece of text is the size, in parameters, of the smallest general-purpose LLM that can correctly decode the writer's intention in the text, provided that larger mainstream LLMs concur on the meaning. The LCL is undefined for texts where the writer's intention has changed or cannot be decoded by any LLM.

Conversationally, the LCL of an individual is bounded above by the LCL of any text that the individual cannot decode.
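The definition and the bound above can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions: the `Result` record, the function names, and the reading of "larger LLMs concur" as "every larger tested model also decodes the text" are not part of the original definition, and the size given to the larger model is a placeholder rather than a real figure. Because only a finite set of models is ever tested, the value returned is an upper bound on the LCL.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Sequence


@dataclass(frozen=True)
class Result:
    """One model's attempt at decoding a text (hypothetical record type)."""
    model: str
    params: int    # parameter count of the model
    decoded: bool  # True if the model recovered the writer's intention


def lcl_upper_bound(results: Sequence[Result]) -> Optional[int]:
    """Smallest parameter count such that this model and every larger
    tested model decoded the text. Returns None when the LCL is undefined
    for the tested models (for example, the largest model fails)."""
    bound = None
    # Walk from the largest model down and stop at the first failure.
    for r in sorted(results, key=lambda r: r.params, reverse=True):
        if not r.decoded:
            break
        bound = r.params
    return bound


def individual_lcl_bound(undecoded_text_lcls: Iterable[Optional[int]]) -> Optional[int]:
    """Upper bound on an individual's LCL: the smallest defined LCL among
    the texts that the individual could not decode."""
    known = [lcl for lcl in undecoded_text_lcls if lcl is not None]
    return min(known) if known else None


# Assumed inputs: the 100B size is a placeholder, not a published figure.
results = [
    Result("larger commercial model (placeholder size)", 100_000_000_000, True),
    Result("Llama 3.2 3B", 3_000_000_000, True),
]
text_bound = lcl_upper_bound(results)
reader_bound = individual_lcl_bound([text_bound])
```

With these assumed inputs, both `text_bound` and `reader_bound` come out to 3 billion parameters. The reader's bound simply inherits the bound of the text they could not decode.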

Roshan George @arjie Replying to @jstephencarter

Citizens *are* prioritized. They have the best folk working for them rather than just whomever they could find. This is the beauty of America: anyone can be an entrepreneur and when they are, they can hire the guy they think can do the job best.

Jan 2, 2025[1]

Stephen Carter @jstephencarter Replying to @arjie

Silly word salad. I can’t hire an eight-year-old. I can’t hire certain types of criminals. It’s outrageous that I can hire a non-citizen when they are American citizens capable of doing the job, and there absolutely are.

Jan 2, 2025[2]

As an example, the latter tweet refers to the former as "silly word salad", implying that it is a "confused or unintelligible mixture of seemingly random words and phrases" (as described by Wikipedia). If an LLM can decode the text, then the text is not, in fact, unintelligible. Consequently, the reader who considers it word salad has an LCL bounded above by that of the text.

Since Llama 3.2 3B decodes the tweet, we can safely conclude that the text has an LCL of at most 3 billion parameters. By the bound above, we must also conclude that the person unable to comprehend the original tweet has an LCL of at most 3 billion parameters.

Footnotes

- [1] Roshan George [@arjie] (Jan 2, 2025). "Citizens *are* prioritized. They have the best fol..." (Tweet) – via Twitter.

- [2] Stephen Carter [@jstephencarter] (Jan 2, 2025). "Silly word salad. I can't hire an eight-year-old. ..." (Tweet) – via Twitter.