Blog/2026-01-06/Is The Internet Dead?

The Dead Internet theory claims that all Internet content is now posted by bots and so on in order to control the population and whatnot. I don't think the population is particularly controlled, but I do think that a lot of re-runs happen and I think that's structural.

Does This Happen?

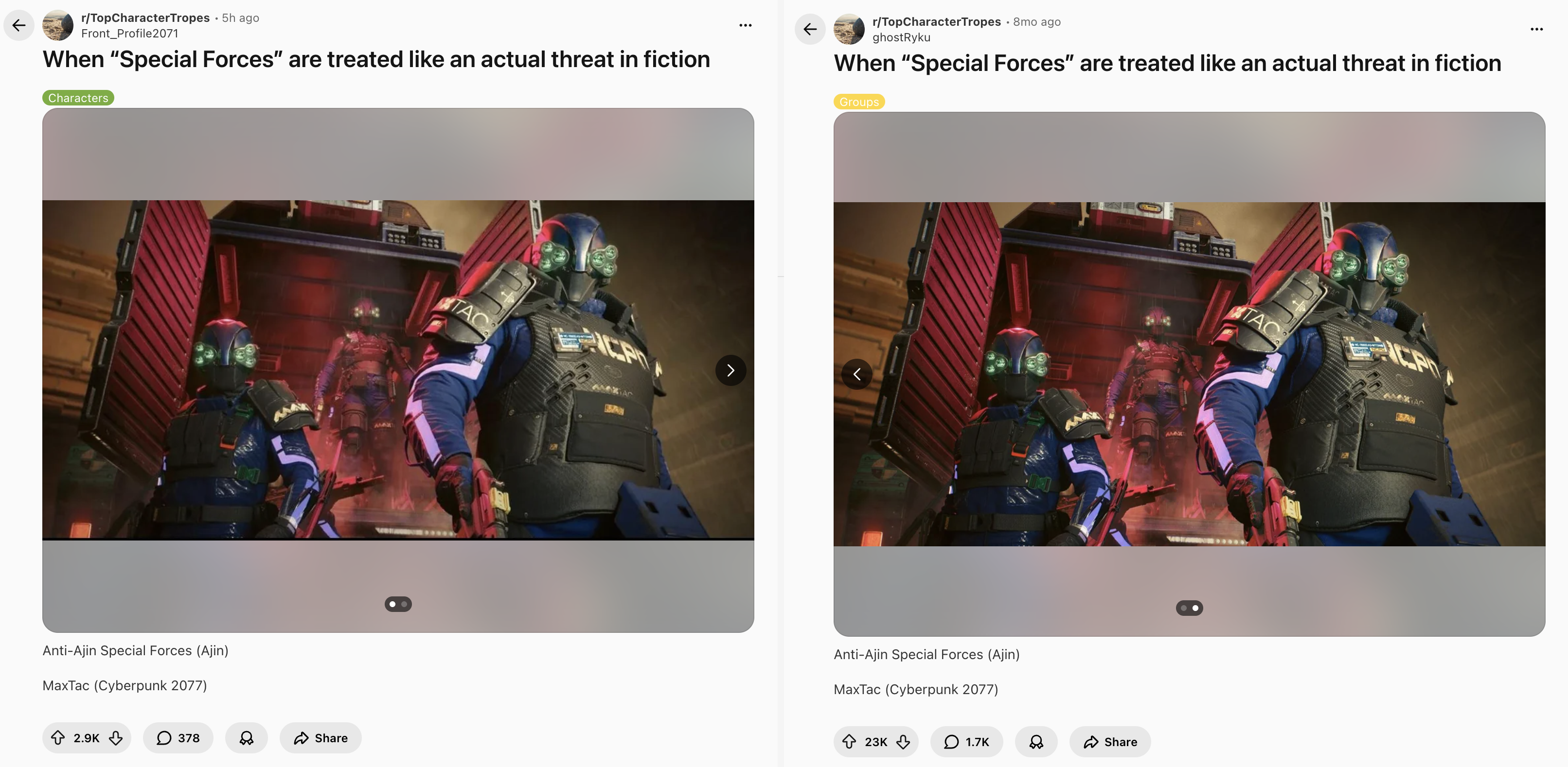

Reddit is a pretty big social network and it's pretty public, so you can pick out a top post on the front page and try to find its origin. All the ones that are temporally relevant (Tim Walz and the Autism Scams, Trump and Venezuela, Trump and Greenland) are unlikely to be duplicates, but if I pick out one that's content-based, a duplicate shows up pretty fast.

Take a look at these two Reddit posts. I'll post them below for you to look at next to each other:

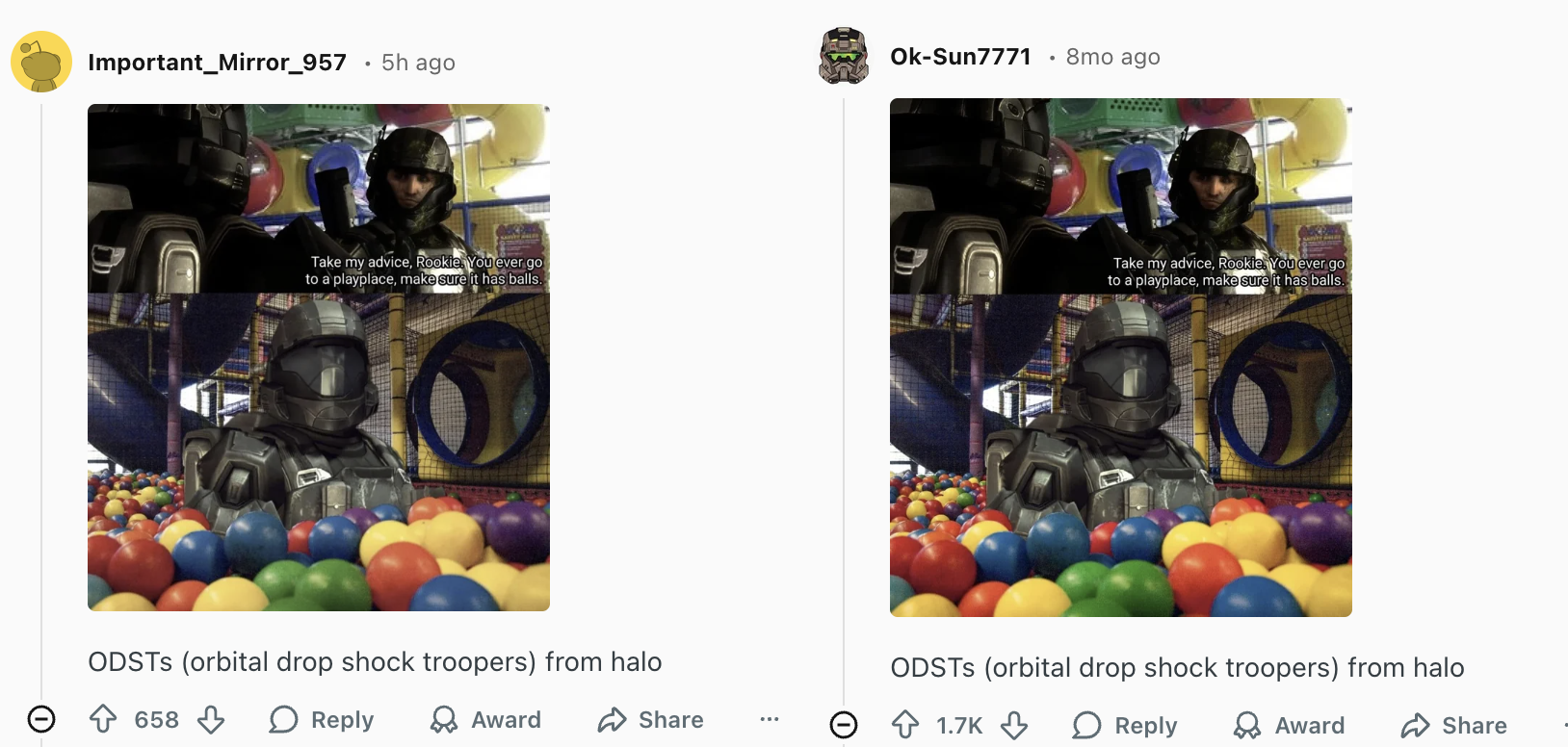

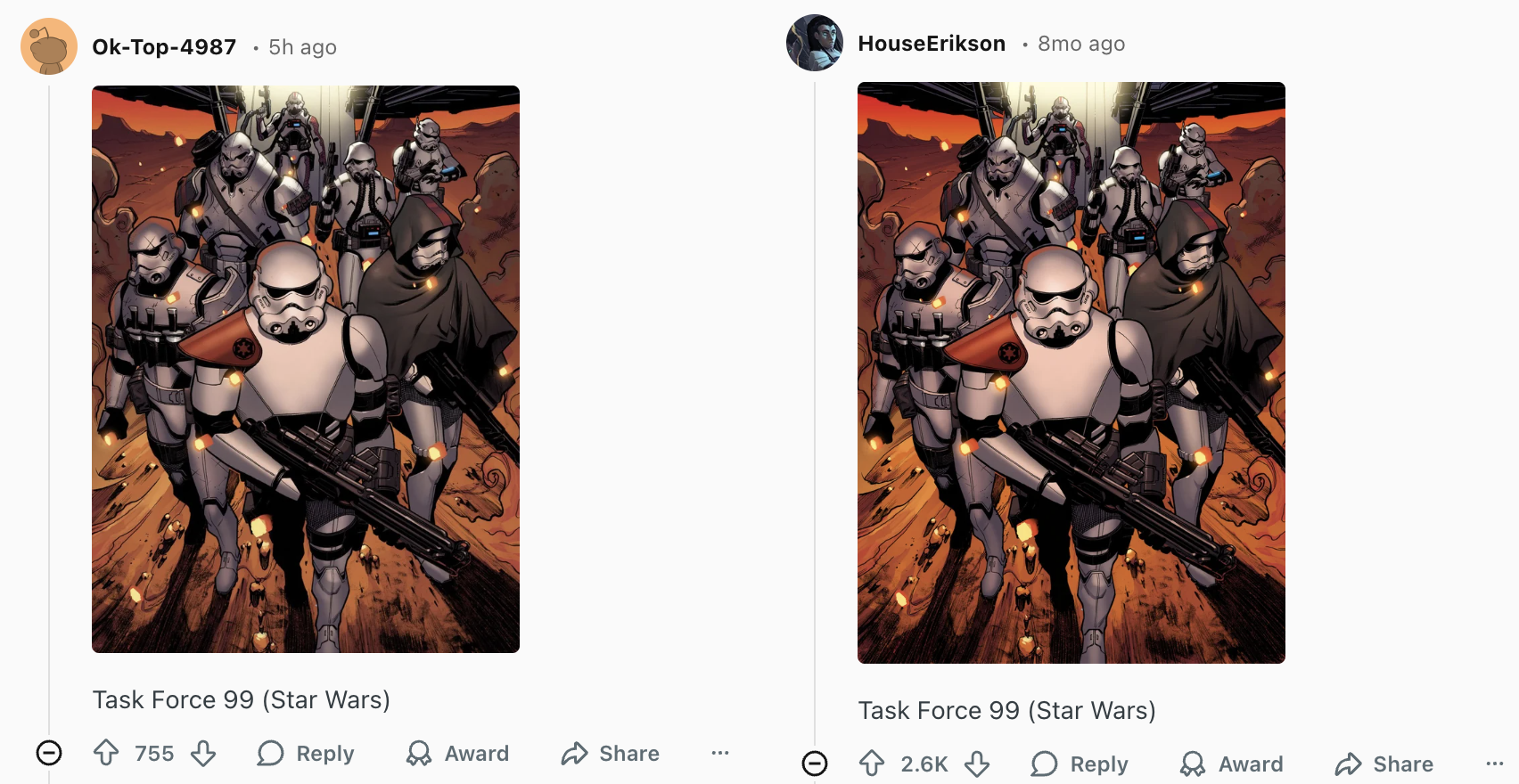

Okay, so the post is real but that's not a big "Dead Internet" thing. You'll see, however, that the comments are pretty similar too:

and you can find that similarity replicated

On Reddit, usernames can either be chosen or generated as defaults. You'll see that the new usernames are all generated: Word Word Number is the format that Reddit uses. So certainly it's true that one style of post is a duplicate of old posts made by humans. This makes sense. It's the same pressure as studios making remakes or sequels/prequels vs. new IP. A demonstrated well-performing post is easier to redo than trying to create a new one.

Okay, so it clearly does happen and is pretty easy to find. In this case, everything was the same: text, images, comments. I think that's often going to be the case. When you have a large audience, only some fraction are going to see any given post, so repeated exposure doesn't risk saturation.
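A rough sketch of the saturation argument, assuming (purely for illustration) that each post independently reaches a fixed fraction of the audience. The function name and the reach values are made up; none of this is measured Reddit data.

```python
def overlap_fraction(reach: float, reposts: int = 1) -> float:
    """Probability that a given user sees the original AND at least one repost.

    Assumes every post independently reaches a fraction `reach` of the
    audience, which is a simplification: real feeds are not independent.
    """
    if not 0.0 <= reach <= 1.0:
        raise ValueError("reach must be between 0 and 1")
    sees_some_repost = 1.0 - (1.0 - reach) ** reposts
    return reach * sees_some_repost


if __name__ == "__main__":
    # Hypothetical reach values, chosen only to show the shape of the curve.
    for reach in (0.01, 0.05, 0.20):
        both = overlap_fraction(reach)
        print(f"reach={reach:.0%}: {both:.2%} of users see both copies")
```

Under that assumption, a post that reaches 5% of the audience and is reposted once is seen twice by about 0.25% of users, which is why a repost can run for a long time before anyone notices.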

As for the subreddit or Reddit itself, these things are clearly highly engaged-with as far as the human users are concerned. The 1% rule (roughly 1% of users create content while the rest lurk) means that the majority of human users are only consuming the content, so these repetitive comments and posts aren't a big deal so long as you don't hit viewer saturation.

But what's in it for the bots?

Why Do This?

It's pretty widely known that the best way to get a seemingly honest review is to Google "Product Name reddit" and see people talk about it. This is likely true for other social networks as well. People want to see who thinks something is useful and they want to see it in the context where the people are doing so incidentally, i.e. it's not a car mechanic recommending car mechanics.

In general, I lean towards distributed incentive response rather than top-down control as an explanation for behavior. I think it's less likely that The Government Is Controlling Our Thoughts Through Fake Reddit Accounts than that there are unscrupulous social media marketing services that will create positive press for your product if you pay them.

Why Copy Comments?

Online communities have had a bot problem for a long time. The price of anonymity is Sybil vulnerability. So the timeline goes like this:

- An anonymous community forms

- Once it is sufficiently popular, it is useful to influence it

- The natural thing to do is to create sockpuppets and use them

- The community attempts to solve this by requiring tenure (many subreddits require this)

- The natural thing to do is to create dormant sockpuppets

- The community adapts by inspecting accounts to see if they "look human": interact with a variety of posts, express opinions about unimportant things, and so on

At this point, one is faced with the strong challenge of generating human-looking comments. LLMs don't do this well: they have a stereotypical view of how online commenters speak which is stilted and very "hashtag selfie lol". But a pretty trivial approach is to pick a human account and simply replicate all their comments. So long as view saturation hasn't occurred, it is unlikely that human will see the reposts of his comments, and the mildest melding of a few accounts will reduce the chance that it looks exactly like another.
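To make the replication pattern concrete, here is a minimal sketch of how copied comment histories could be spotted, assuming you already have comments as `(timestamp, author, text)` tuples. The function names, the `min_len` threshold, and the sample usernames are all hypothetical; this is not something Reddit or any subreddit is known to run.

```python
import re
from collections import defaultdict


def normalize(text: str) -> str:
    """Lowercase and collapse punctuation/whitespace so trivial edits don't hide a copy."""
    return re.sub(r"[^a-z0-9]+", " ", text.lower()).strip()


def copied_fraction(comments, min_len=20):
    """Return {author: share of their comments that repeat an earlier comment by someone else}.

    `comments` is an iterable of (timestamp, author, text) tuples.
    Comments shorter than `min_len` after normalization count toward an
    author's total but are never flagged, since "lol" repeats innocently.
    """
    first_seen = {}             # normalized text -> author who posted it first
    copied = defaultdict(int)   # author -> number of flagged comments
    total = defaultdict(int)    # author -> number of comments overall
    for _, author, text in sorted(comments, key=lambda c: c[0]):
        key = normalize(text)
        total[author] += 1
        if len(key) < min_len:
            continue
        original_author = first_seen.setdefault(key, author)
        if original_author != author:
            copied[author] += 1
    return {author: copied[author] / total[author] for author in total}


if __name__ == "__main__":
    # Made-up sample data in the "Word Word Number" username style.
    sample = [
        (1, "alice", "I bought this drill last year and the chuck still wobbles."),
        (2, "alice", "Honestly the sequel was better than the original film."),
        (9, "Brave_Otter_1234", "I bought this drill last year and the chuck still wobbles!"),
        (10, "Brave_Otter_1234", "Honestly the sequel was better than the original film."),
    ]
    print(copied_fraction(sample))
```

Because this compares each comment against every earlier comment rather than against one source account, melding a few humans' histories together doesn't lower the score. Even light paraphrasing would defeat it, though, so treat it as an illustration of the arms race rather than a fix.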

How To Deal With Them

This is a hard thing, and where we are is less a destination and more one point on the path as the various participants adapt to each other's approaches. Tenure, history, and so on have been ineffective, but some communities (/r/hardwareswap or /r/homelabsales) require both buyer and seller to confirm. One can Sybil a few such confirmations, but because of the smaller numbers of people, these rings are easier to detect.

Reddit users attempt to crowdsource something at /r/TheseFuckingAccounts/ but ultimately, these bots aren't working counter to the platform's objectives and human obsession only takes things so far.

Machine-runnable methods like shadowbanning (hiding someone from everyone else) or heavenbanning (giving someone a lot of upvotes but hiding them from others) work temporarily but most bot rings will do the natural thing and use anonymous or innocent-seeming accounts to test visibility.

Metafilter has a one-time $5 fee as a gate that raises the price for a Sybil attack, and because there are no refunds on banning, it might make it cost-prohibitive.
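A back-of-the-envelope sketch of how a signup fee changes the economics. The $5 fee comes from the paragraph above; the ring size, ban rate, and number of rounds are assumptions picked only to show the arithmetic.

```python
def ring_cost(accounts_needed: int, ban_rate: float, rounds: int, fee: float = 5.0) -> float:
    """Total signup fees to keep `accounts_needed` accounts alive for `rounds` rounds.

    Assumes a fraction `ban_rate` of the ring is banned each round and every
    banned account is replaced at full price (no refunds on banning).
    """
    initial = accounts_needed * fee
    replacements = accounts_needed * ban_rate * rounds * fee
    return initial + replacements


if __name__ == "__main__":
    # Hypothetical ring: 1,000 accounts, 10% banned per month, run for a year.
    print(f"${ring_cost(1000, 0.10, 12):,.2f}")
```

With those made-up numbers, the ring pays $5,000 up front and another $6,000 in replacements over the year. On a free platform both terms are zero, so the fee only bites if moderation actually bans accounts often enough to make the replacement term hurt.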

In the end, all of these things happen because we're trying to scale trust. Maybe trust is not scalable.